At the World Summit AI Americas 2020 Online, Internet Association (IA) Director of Social Impact Policy and Counsel Sean Perryman participated in a panel titled “What Will The Increasing Use Of AI For Profiling And Tracking Mean For Privacy And Bias?”

Perryman joined moderator Fiona McEvoy, a Tech Ethics Researcher/Writer and Founder of YouTheData.com, as well as panelists Alice Xiang, Research Scientist at Partnership on AI; Jay Stanley, Senior Policy Analyst at the American Civil Liberties Union (ACLU); and Mona Wang, Staff Technologist at the Electronic Frontier Foundation.

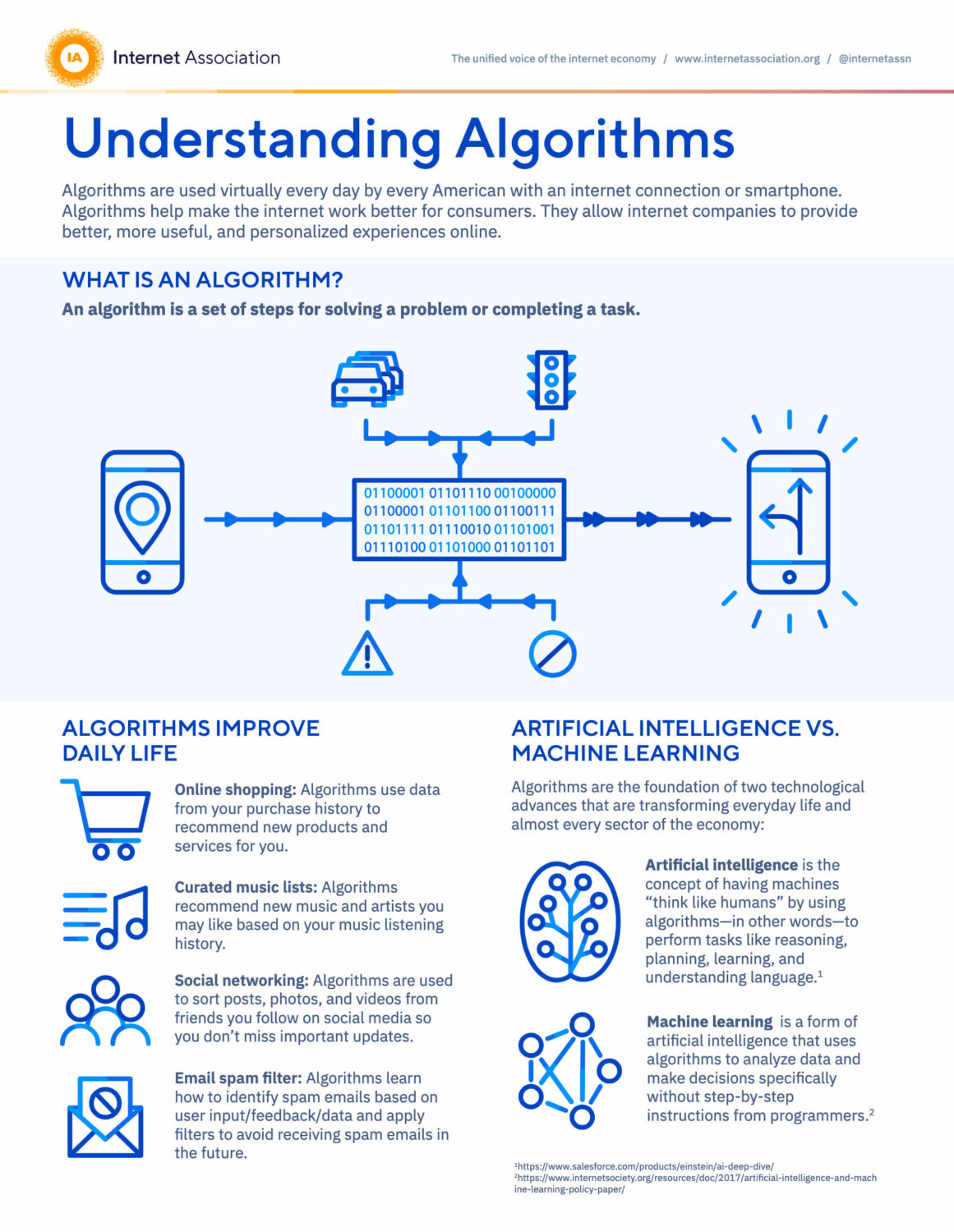

The panel started with a discussion about how artificial intelligence (AI) is used today and the importance of deploying this technology ethically. The group discussed algorithmic bias and the importance of context when using AI. Xiang pointed to the use of AI in more consequential areas, such as the criminal justice system, as one example of why context matters when determining AI deployment. IA’s Sean Perryman agreed with Xiang, highlighting that explainability is needed to build public trust in AI, particularly in high-risk situations.

Explainability really is at the heart of this – that a person understands how their data is being used, whether or not AI is making a decision, and how that decision is reached. I think all of that builds public trust, but then it also helps solve some of these problems of unfairness that we’re seeing.

Sean Perryman

Perryman went on to explain that most non-technical institutions adopting AI technology (e.g. courts adopting sentencing software) do not understand that the algorithms they are implementing have biases, and instead view them as neutral technology that guarantees fair results. He advocated for better practices to educate institutions on the limits and restrictions of current AI technology in order to avoid bias in algorithmic decisions.

The conversation then moved to the current policy landscape and whether or not there should be a blanket ban on the use of AI technologies. Stanley pointed out that currently there is no outright ban on the use of the technology. It will be even more challenging to enforce blanket bans for individuals as the use of AI increases, though some state-level laws exist today limiting government’s use of AI. Perryman expanded on that point by discussing the lack of consistency in how AI technology is deployed. There was, and still is, little transparency on how AI and the data collected are used. Perryman elaborated that inconsistencies with implementation and data usage could be mitigated with federal privacy legislation.

Right now, just depending on what city or state you’re in, the rules of the road are different depending on where you’re at, and often they don’t apply to law enforcement at all.

Sean Perryman

Perryman was then asked if AI-driven technologies can be used in an ethical way to surveil people. He emphasized that these technologies can be used ethically. However, when it comes to privacy, it is important to distinguish ongoing, continuous surveillance with AI from brief interactions with surveillance technology, because with continuous surveillance data can be constantly compiled, and it is unclear how that data is used and shared.

When we speak about facial recognition, we are really talking about how that data is being used. That’s why I’m always saying we have to tie this to the conversation about federal privacy legislation.

Sean Perryman

Finally, the panelists were asked about their biggest concern with AI as it pertains to privacy and bias. Perryman highlighted the intersection of algorithmic bias with data use. He emphasized that the way to ensure equitable uses of AI is to enact comprehensive federal privacy legislation that protects people’s data and sets an economy-wide national standard for companies.

Federal privacy legislation, having some sort of consistent framework for how we handle people’s data…and making sure that surveillance isn’t used in poor, black, and brown neighborhoods, and meanwhile the more rich, and white neighborhoods are getting the beneficial uses of that technology [are big concerns].

Sean Perryman